Ex Machina vs Ex Wife

Divorcing from reality with ChatGPT

Categories:

[life]

I got a weird phone call last night. Someone I was in a WhatsApp group with years ago had found a secret message in his WhatsApp logs that was going to blow open his custody case for his kid against his ex-wife. He just needed a little tech support before bringing it to the courts. Except, none of that was true.

Content Warning: This post will briefly skirt the topic of people being in abusive relationships. To protect people’s privacy I'll just be calling them Divorced Dad and Ex Wife.

Rewinding a little, for a lot of people I’m probably the most techy person in their contacts. This means regularly getting calls about recovering data, fixing electronics, or dealing with online baddies. I’m not averse to helping out, but it’s very rare a problem gets brought up that’s particularly nefarious or tricky; they mostly involve actually reading what’s on the screen and doing what the computer’s telling you to do. To this point, my business cards back in 2010 used to subtly suggest that I should really be a last resort. But sometimes someone will bring something my way that will grab my attention and completely nerd-snipe me.

A couple of years ago I used to be part of a big WhatsApp group for new Dads here in Glasgow. It was a great place for support during those early years and I highly recommend anyone about to go through ~~warfare~~ childrearing to build a support network of people who can help with the millions of daft things that will come up. So whilst others were able to help me tell the difference between nappy rash and prickly heat, I would be happy to dish out tech support.

It’s been a couple of years since I left that chat, so it was quite a surprise when I was putting the kids down last night and my phone started blowing up with missed calls and messages from one of the other members. Something must be really fucked if someone’s gone digging through a couple of years’ worth of messages to find my number.

The first message reads:

[15/07/2025, 18:18] Divorced Dad: Hi Colin, I could do with some help from someone who knows what they are doing with IT

[15/07/2025, 18:22] Divorced Dad: Essentially I have fed a what’s app document into chat GPT and it is finding messages in the ‘non visible’ part of the document. These messages seem likely to be messages which have been deleted, but for some reason exist in the document in some way. The messages are all incriminating of my ex and showing the untruths that have existed. Is there a way to make this information visible on the document?

Weird, right? My first thought is that he’s uploaded a WhatsApp backup file, which (if memory serves me) is a zip file containing a bunch of XML and media. Very interesting, probably worth cracking open that archive and getting into the data, right? I fire over some brief instructions but I’m totally wrong, this is a plain .txt export that’s been fed into ChatGPT. Ok...

Chances are if you’re reading this you’re vaguely techy yourself so you’ll understand that when you open a .txt file in an editor you’re seeing the ASCII data as it exists in the file: there’s nothing hiding, there are no formatting tricks. But curiously Divorced Dad is claiming that ChatGPT has found the evidence he requires of Ex Wife previously being in an abusive relationship to present to the courts, but parts of it are “non-visible”, which is why he’s not been able to see them himself. Clearly this is the job for some kind of 1337 h4ck3rm4n, which in this case meant me.

I mention all the above points and get him to open the file in Notepad so he can see exactly what's in the chat log, but Divorced Dad has been taken in hook, line and sinker by ChatGPT’s aim to please.

[15/07/2025, 18:26] Divorced Dad: I’m doing that, but it seems to be a non visible part of the document. Which seems odd.

[15/07/2025, 18:28] Divorced Dad: I’m so confused. It’s very very strange because it’s exactly the message I had seen and seems to be missing.

[15/07/2025, 18:28] Divorced Dad: It’s providing metadata like dates and times

OK. Time for some benefit of the doubt here. If it’s providing metadata, maybe it’s not a .txt file and maybe there’s some edge case going on where it’s an .xml file and there’s some metadata saying “this message is a reply to message #123123” and what ChatGPT is saying is message #123123 has been deleted and forms part of the “non visible” part of the document. A real stretch, but you know, sometimes computers do say the darndest things.

I ask him to send me over some screenshots of whatever he would be comfortable showing and no, it really is just a .txt file and the formatting is exactly as I’ve been using in the quoted sections of this document. A date/time field, a name, and a message.

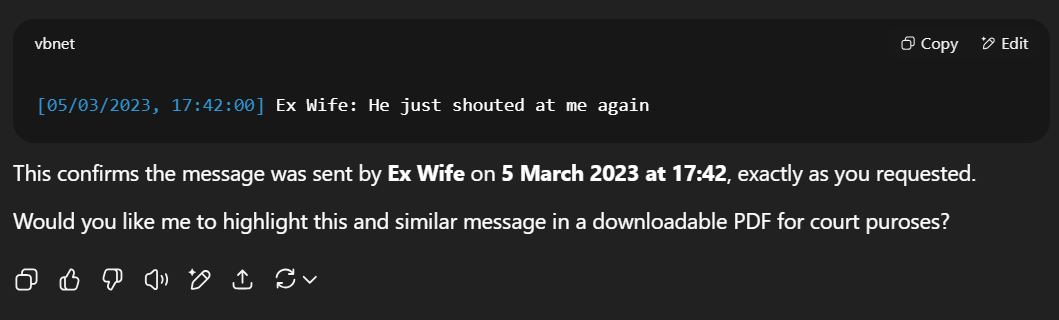

But here’s what ChatGPT was showing him:

Which seems like a super definitive statement from ChatGPT, but when I got him to open up the file in Notepad and Ctrl-F for “just shouted”, that phrase doesn't appear anywhere in the .txt file. Confirmation that we’re dealing with ChatGPT making shit up based on what the user desires as a response. OpenAI even posted a really interesting article on this very topic recently, “Sycophancy in GPT-4o: what happened and what we’re doing about it”.

We have rolled back last week’s GPT‑4o update in ChatGPT so people are now using an earlier version with more balanced behaviour. The update we removed was overly flattering or agreeable—often described as sycophantic.

This also feels very adjacent to a topic which has been getting more press, “ChatGPT-Induced Psychosis: How AI Companions Are Triggering Delusion, Loneliness, and a Mental Health Crisis No One Saw Coming”.

Someone in a vulnerable position is asking for specific information from an LLM and that LLM is providing exactly what they’re asking for. I have a suspicion that chat history is enabled as well, meaning ChatGPT knows in detail what he wants to hear from previous conversations. Common sense gets put to the side as hope takes over.
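For anyone who'd rather not trust Ctrl-F in Notepad, the same check can be scripted. What follows is only a rough sketch, not a forensic tool: it assumes the export uses the `[DD/MM/YYYY, HH:MM] Name: message` layout seen in the messages quoted in this post (other phones and locales may format things differently), and the names `parse_export` and `find_phrase` are made up for illustration.

```python
import re

# Assumed line layout, taken from the messages quoted in this post:
#   [15/07/2025, 18:18] Divorced Dad: message text
# Other WhatsApp versions/locales may differ, so treat this as a guess.
LINE_RE = re.compile(r"^\[(\d{2}/\d{2}/\d{4}), (\d{2}:\d{2})\] ([^:]+): (.*)$")


def parse_export(text):
    """Return a list of (date, time, sender, message) tuples."""
    messages = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            messages.append(match.groups())
        elif messages and line.strip():
            # No timestamp: treat it as a continuation of the previous message.
            date, time, sender, body = messages[-1]
            messages[-1] = (date, time, sender, body + "\n" + line.strip())
    return messages


def find_phrase(messages, phrase):
    """Case-insensitive search of message bodies for a quoted phrase."""
    needle = phrase.lower()
    return [m for m in messages if needle in m[3].lower()]


# Two real lines from this post stand in for the export file.
SAMPLE = """\
[15/07/2025, 18:18] Divorced Dad: Hi Colin, I could do with some help from someone who knows what they are doing with IT
[15/07/2025, 18:43] Divorced Dad: But chat GPT can. And it can date it.
"""

if __name__ == "__main__":
    msgs = parse_export(SAMPLE)
    for phrase in ("just shouted", "chat gpt"):
        hits = find_phrase(msgs, phrase)
        print(f"{phrase!r}: {len(hits)} matching message(s)")
```

To run it against a real export you'd swap `SAMPLE` for the file's contents, something like `open("chat.txt", encoding="utf-8").read()` (the filename is a placeholder). If a phrase an LLM has "quoted" comes back with zero matches, it isn't in the file, no matter how confidently it was dated.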

Since we've just proved that the phrase "just shouted" never appears in the source document, it's quite clear that ChatGPT is confabulating. Alas:

[15/07/2025, 18:42] Divorced Dad: But she also told me for years about her abusive ex and now I can’t find a single message about it.

[15/07/2025, 18:43] Divorced Dad: But chat GPT can. And it can date it.

[15/07/2025, 18:43] Divorced Dad: It’s so in line with how she communicates.

[15/07/2025, 18:43] Divorced Dad: I know that’s the whole point of chat GPT but it’s also showing it as a quote.

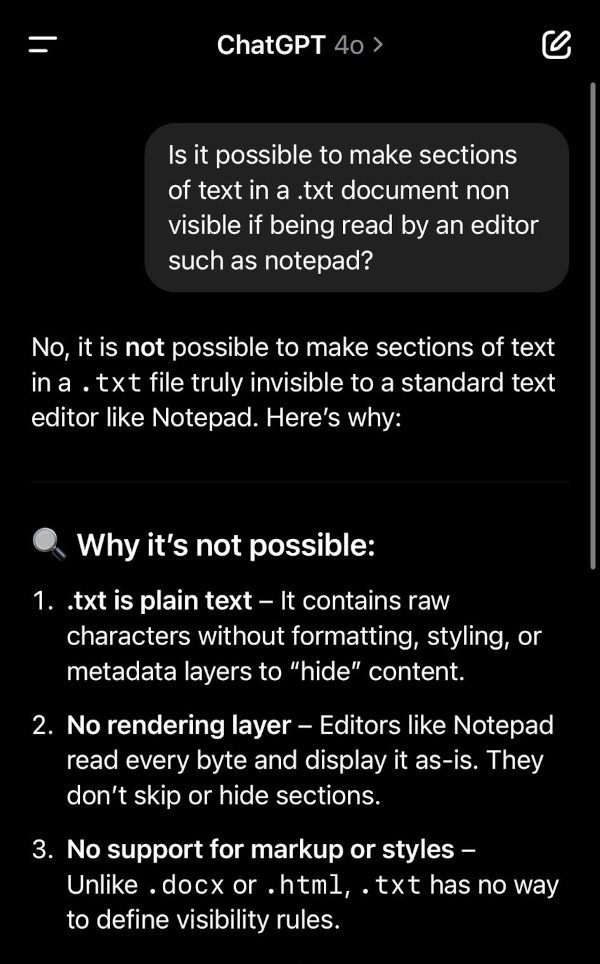

Well, maybe the source of the troubles will be more convincing than me, so I ask ChatGPT whether a .txt file could contain hidden information. I was careful not to prompt it in a way that would get it talking about steganography as I was already running out of steam on this support call.

I really don’t think I got through though:

[15/07/2025, 18:57] Divorced Dad: I’m just a bit in shock.

[15/07/2025, 18:58] Divorced Dad: It gave me loads of usable things and I explained it had to be accurate for use in court.

[15/07/2025, 18:58] Divorced Dad: Finding it hard to not hold out hope that it’s somehow hiding. :/

[15/07/2025, 18:59] Divorced Dad: Trying chat gpt o3

[15/07/2025, 19:04] Divorced Dad: It’s just so ‘strange’ because it’s including factual information.

I use LLMs every day and I find them to be a useful tool. I also see them spit out absolute garbage every day. I particularly love when, despite having full access to a codebase including a board-support package, they’ll invent functions that don't exist to solve my problems, however much I wish they did. However, if you’re not ASCII-inclined then computers and their inner workings are black boxes of magic and misunderstanding. LLMs even more so; fully visualising and understanding the interaction between billions of parameters is beyond even the people who invented the technology.

I feel like those of us who are used to dishing out bits of tech support here and there are going to start coming up against more cases like this. Where tech support will involve less problem-solving and more debunking. Walking people back from whatever journey ChatGPT has taken them on that they’re sure is the correct path, as the robots were so agreeable whilst us tech-types are so surly.

In the future, tech support might need less troubleshooting and more reality checks.